This past week at The International, I managed to get some time with the Vive, the Valve/HTC virtual reality project. I’d never tried VR before, but stories from friends who own Oculus Rift developer kits, along with the general progress the industry has made, really got me interested.

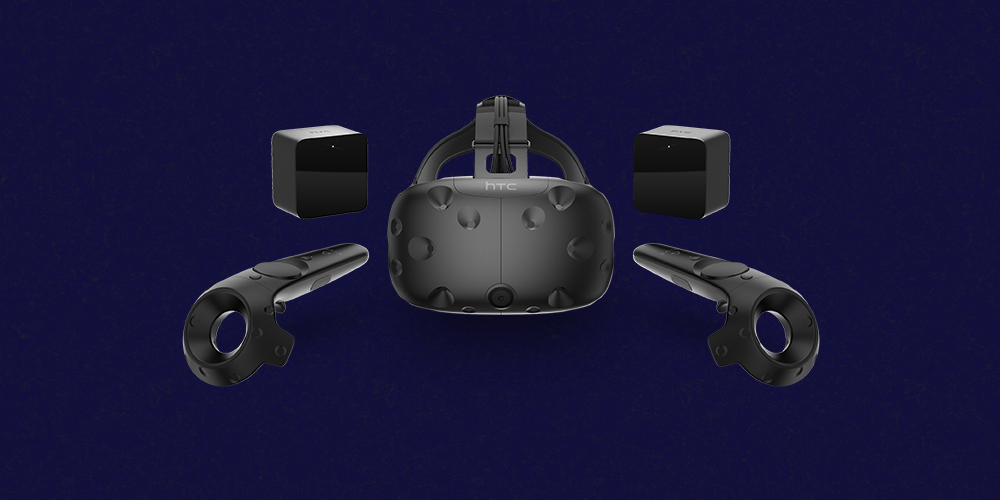

The Vive is a headset/visor combined with earphones. A “tail” of cables comes out of the back of the visor, which really gives the vibe of being plugged into The Matrix. Keeping track of where the cables are as you move around the Vive environment is crucial, not only to avoid breaking immersion, but also because I wanted to avoid damaging the demo model.

After signing in at a desk for the demo, I was taken into a room alone with the presenter. The room had four laser projectors on poles, which I was told were there to sweep the activity area with light that the headset uses as a reference. In essence, it works like the Wii, where an IR emitter (the Wii sensor bar) is paired with a sensor (in the remote) to determine where you are, what you’re facing, and what to show you. From some research afterward, it looks like these emitters will be mounted on walls in a consumer use case, which makes sense, since freestanding poles would probably be awkward to set up in a house.

Articles have stated that these emitters will be able to sense where objects are in a room and adjust accordingly, so you won’t need a dedicated empty space to play in. If you do reach a boundary inside the demonstration, a grid of light lines appears, signalling that you’re about to hit a “wall”.

After putting on the visor, I found myself in a test environment with a lobby of different scenarios. It was a bit like the Wii’s home screen, but closer to an art gallery. I was handed two controllers and tried out some buttons; eventually a balloon inflated out of my left controller, and I was able to bat it around, watch it float upward, and blow new ones. The left controller also had a wheel for choosing balloon colours, which was responsive and gave enough feedback that you felt you were actually there.

A big concern for me coming into this demo (and VR in general) was immersion. I wear glasses, and I worried they would keep me from really losing myself in what was being presented; that ended up not being a factor at all (here’s a photo I snuck of myself wearing it).

All in all, I do not hesitate to say it was a life-changing experience.

Each demo showed off something different. I explored a sunken ship’s bow where minnows swam away from my “hands”, assembled a sandwich in a kitchen that showed off how objects interact with each other, painted with light in 3D space, and explored a Secret Shop straight out of Dota 2.

Despite the environments not looking completely photorealistic, everything just sucked me in. The audio and the resolution of the eyepieces were of such high quality that I eventually kind of forgot what I was doing. I was mostly preoccupied with the demos, but I remember having the biggest grin on my face, because one of the most attractive tropes of science fiction was actually here.

The controllers have thumb pads for things like menu selection and fine manipulation, as well as side and bottom triggers for things like grip. Your arms and legs were never rendered, but the controllers themselves often mattered a great deal, since menus and options were projected onto them.

In the light-painting demo, I could spin the menu on my left controller with my right, dabbing as if I were choosing paint off a palette. In the Secret Shop, I got my own light source; moving it around the environment cast dynamic shadows and could draw reactions from the simulated characters.

These moments just felt… amazing. Discovering new things and fighting the conditioning I’d picked up from “normal” video games was exhilarating: something as simple as reaching into a giant soup pot and realizing there were no awkward collision mechanics just blew my mind.

In that cooking mini-game, I noticed how much I rely on games to fill in complicated blanks for me, blanks I actually had to fill in myself here. Reaching deep into a pot instead of waiting for an ingredient to snap to my hand, or simply not having a “press A to pick up” prompt, felt alien. That’s a good thing: it signals that this medium will actively change how we approach interaction in digital spaces, and from a futurist perspective, it’s crazy to think that it will be available now instead of twenty years from now.

I know they were just tech demos, but I couldn’t help thinking about the possibilities of a Google SketchUp-style open-source marketplace for designing and publishing models that could be brought into a VR environment and then manipulated, annotated, or studied. I thought about the blue whale I saw on the ship’s deck, and how we could simulate an aquarium without needing to house animals.

I thought about the implications of a virtual reality-influenced existence, where people may get addicted to an ideal, controlled environment.

That last point is obviously a job for more accomplished philosophers than me, and is kind of scary to delve into.

This demo instantly made me a believer that VR can be done right, and it has lit a bit of a fire in me. I want to see where this medium goes, and I want to grow up alongside it, so to speak; depending on the price, I might just be an early adopter, but I can’t wait to see what five or ten years will do for it.

Until then, it’s just a matter of waiting.
